Your First A/B Tests: A Step by Step Guide

Your A/B testing system is hot off the presses: you've integrated with Optimizely or Google's Content Experiments, or maybe deployed some code of your own. You open your eyes, look around, and see a whole new world of experimental opportunities.

But suddenly, you start asking more questions and forming more hypotheses than you could possibly test in a lifetime. Should you start testing 41 shades of background hues to see what's most attention grabbing? Maybe start by testing a rewrite of every piece of copy on your site? Or maybe you should start A/B/C/D testing your web fonts?

No.

Here's a step by step guide.

Step 1: Start by running an A/A test.

You're excited about A/B testing, but you've never run an experiment on your product. And since your A/B system is hot off the presses, everyone else in your organization will also be new to this.

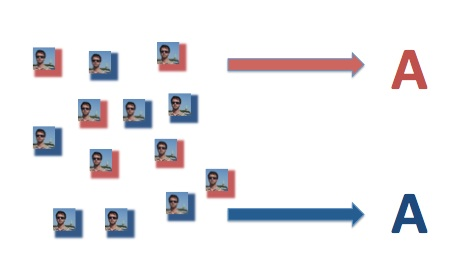

A/A tests are conceptually quite simple: both variants are A. You've changed absolutely nothing; you're only exercising the A/B system by randomly assigning users to buckets. A fundamental component of A/B testing is the natural statistical variation that occurs between buckets, and running an A/A test is a great way to "see" what this variation looks like. You might expect zero variation between your variants, but there won't be.

So as your first step, run an "empty" A/A test, watch your results for a week, and try to familiarize yourself with some basic statistics behind A/B testing.
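To get a feel for that natural variation before you look at real data, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the function name `simulate_aa_test`, the 5% conversion rate, and the 20,000 users are made up and not taken from any real system.

```python
import random

def simulate_aa_test(n_users, true_rate, seed):
    """Split users at random into two identical buckets and
    return the observed conversion rate of each bucket."""
    rng = random.Random(seed)
    counts = {"A1": [0, 0], "A2": [0, 0]}  # bucket -> [users, conversions]
    for _ in range(n_users):
        bucket = "A1" if rng.random() < 0.5 else "A2"
        counts[bucket][0] += 1
        # Both buckets convert at exactly the same underlying rate.
        if rng.random() < true_rate:
            counts[bucket][1] += 1
    return {name: conv / users for name, (users, conv) in counts.items()}

# Hypothetical numbers: 20,000 users, 5% true conversion rate.
rates = simulate_aa_test(n_users=20_000, true_rate=0.05, seed=1)
print(rates)
```

Rerun it with a few different seeds: the two rates will hover around 5% but will rarely match exactly, even though nothing differs between the buckets.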

Step 2: A/B test a single feature release.

You make changes to your site constantly. Whitespace changes, bug fixes, and tooltips are a big part of keeping your product quality high, but most of the time they will have no impact on metrics like time on site, conversion rate, or bounce rate. Don't start by testing these.

Some aspects of your product get a high amount of traffic (say, your homepage or search page), and some components are mission critical to your business (in an e-commerce setting, your checkout funnel). Yet other components are neither high traffic nor mission critical: for example, your about page.

So start by picking a relatively simple front-end change affecting a high traffic page. For example, suppose your social media share button has some slick share logo, and you'd like to understand if clarifying the logo gets people to share more often.

Control:

Test:

Why is this a good test? First, the button appears on every content page on the site. Second, copy changes can often have a larger impact on users' behavior than you'd expect. And finally, making this change is really simple. Your first true A/B experiment should focus on methodology and outcome; don't test something that may be buggy or hard to integrate. Tools like Optimizely or Google's Content Experiments are great for testing visual changes like the one above, but it is much harder (or impossible) to use them for testing things like search ranking, recommendations, or email.

Step 3: Iterate.

As you run more and more tests, you'll start to learn which tests move the needle and which tests don't.

If a test showed positive improvements, ask yourself if you can further improve on the result. If the "Share" copy above increased tweets by 100%, consider changing the background color from a dull gray to something more noticeable.

If a test showed little to no change, take a step back and ask the following:

- Is volume too small to matter? Say your site gets tweeted 10,000 times per month, and your change increases this rate by 1%. That's 100 additional tweets per month, probably not worth dwelling on.

- Is the change at all impactful? You changed your share button background to hot pink, but people aren't sharing any more than when it was gray. Perhaps the original problem with the share button was one of understanding, not discovery.

- Is the change measurable? Some changes you make are brand or design focused. A new logo design shouldn't show any significant differences in your A/B metrics. Maybe you shouldn't have tested this in the first place.

- Are you measuring the right things? Not every change you make will affect your site's overall conversion rate. If you're testing search ranking changes, you'd expect people to click higher in the result set if quality has improved.

This case is the hardest to analyze and can be frustrating. Dig deeper into what's going on, run some user tests (I love usertesting.com), talk to your customers, and use your brain.
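A related way to look at the volume question is to estimate how many users you'd need before a lift of a given size could even be detected. The sketch below uses the standard normal-approximation formula for comparing two proportions. The function name `users_per_bucket`, the 2% baseline rate, and the default significance level and power are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist

def users_per_bucket(base_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH bucket to detect a relative lift
    with a two-sided test (normal approximation for two proportions)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical 2% baseline share rate.
small_lift = users_per_bucket(base_rate=0.02, relative_lift=0.01)
large_lift = users_per_bucket(base_rate=0.02, relative_lift=0.20)
print(small_lift, large_lift)
```

The required sample grows roughly with the inverse square of the lift, so a 1% improvement needs vastly more traffic than a 20% one. If you don't have that traffic, "no change" may simply mean "couldn't tell."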

Finally, the most useful of an experiment's three possible outcomes is often the negative one. Here, the test shows that things got worse in a significant way: volume is high enough, and the change is both negatively impactful and directly measurable.

Revisit your key hypotheses and test assumptions, and question everything.
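To decide whether a result is positive, flat, or negative "in a significant way," one common approach is a two-proportion z-test. The sketch below is one way to do it, not the method any particular tool uses; the function name and the conversion counts are made up for illustration.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between the
    conversion rates of control (a) and test (b)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both buckets are the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: control converts 500 of 10,000, test 430 of 10,000.
z, p_value = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=430, n_b=10_000)
print(z, p_value)
```

A negative `z` means the test bucket did worse than control; a small p-value (conventionally below 0.05) suggests the gap is unlikely to be the kind of noise you saw in your A/A test.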

Step 4: Think strategically, test strategically.

A/B testing has lots of benefits, and the biggest in my opinion is learning. Think about your strategic initiatives and develop larger hypotheses for your product and business.

Say you've run three experiments to try to get users to share your content more on Twitter, and each has shown no change. Maybe your site isn't as social as you thought. Perhaps you have a gifting site, and people don't actually like sharing presents on Twitter.

Also think about strategic testing. You're worried that your site is too slow, and engineering tells you they need six weeks to decrease average response time from 800 milliseconds to 500. You look at the dozens of initiatives on your roadmap, and ask if this is really necessary. As step zero, instead of trying to speed up the site, you slow it down. Add a 200ms delay to every request in the test bucket, and measure engagement and your other key metrics at an average response time of 1000ms.
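Here is a rough sketch of what such a slowdown experiment could look like in code. It assumes users are bucketed by hashing a user ID and that only the test bucket is delayed; `in_slow_bucket`, `handle_request`, and the experiment name are hypothetical and not part of any real framework.

```python
import hashlib
import time

def in_slow_bucket(user_id, experiment="slowdown", fraction=0.5):
    """Deterministically place a user in the slowed-down bucket by
    hashing the experiment name together with the user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1).
    return int(digest[:8], 16) / 0x100000000 < fraction

def handle_request(user_id, handler, delay_seconds=0.2, sleep=time.sleep):
    """Run the real handler, adding an artificial delay for the test bucket.
    `sleep` is injectable so the delay can be faked in tests."""
    if in_slow_bucket(user_id):
        sleep(delay_seconds)
    return handler()

# Hypothetical usage with a stand-in handler.
response = handle_request("user-42", lambda: "homepage", delay_seconds=0.2)
print(response)
```

Hashing rather than flipping a coin on every request keeps each user's experience consistent across visits, which matters when the metric you care about is engagement over time.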

Or maybe you're concerned with the quality of your homepage recommendation module: try an experiment in which the module shows popular content 50% of the time and existing personalized content the other 50%.
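The recommendation experiment can be sketched the same way. This version assumes the 50/50 split is per user rather than per page view, which is my reading and not something stated above; the function names and the experiment label `homepage-recs` are invented for the example.

```python
import hashlib

def assign_variant(user_id, experiment, variants=("popular", "personalized")):
    """Deterministically assign a user to one of the variants by hashing."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]

def homepage_recommendations(user_id, popular_items, personalized_items):
    """Return the variant name and the items to show in the module."""
    variant = assign_variant(user_id, "homepage-recs")
    items = popular_items if variant == "popular" else personalized_items
    return variant, items

# Hypothetical content lists.
variant, items = homepage_recommendations(
    "user-42",
    popular_items=["top-1", "top-2"],
    personalized_items=["for-you-1", "for-you-2"],
)
print(variant, items)
```

Log the variant alongside whatever engagement metric you track, so you can compare the two buckets afterward.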

Your business as an experiment

As any successful entrepreneur or CEO will tell you, scaling your business requires learning, and learning requires an open mind. A/B testing can be a great tool for experimenting with your business, exposing you to new findings and opportunities. Used properly and in the right context, experimentation can be eye-opening.

Thanks to the product and engineering team @ Loverly, and to Dan McKinley and Greg Fodor for reading drafts of this.